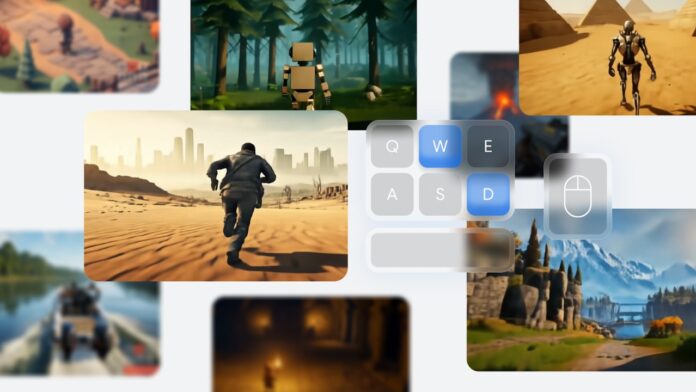

Google has unveiled Genie 2, a large-scale foundation world model capable of generating an endless variety of action-controllable, playable 3D environments for training and evaluating embodied agents. Starting from a single prompt image, Genie 2 generates a world that a human or an AI agent can play using keyboard and mouse inputs.

“Games play a key role in the world of artificial intelligence (AI) research. Their engaging nature, unique blend of challenges, and measurable progress make them ideal environments to safely test and advance AI capabilities,” said Google. “Genie 2 could enable future agents to be trained and evaluated in a limitless curriculum of novel worlds.”

What is Google Genie 2?

While Genie 1 could create a diverse array of 2D worlds, Genie 2 represents a significant leap forward in generality: it can generate a wide variety of rich 3D worlds. Genie 2 is a world model, meaning it can simulate virtual worlds, including the consequences of taking any action (e.g. jumping or swimming).

It was trained on a large-scale video dataset and, like other generative models, demonstrates various emergent capabilities at scale, such as object interactions, complex character animation, physics, and the ability to model and thus predict the behavior of other agents.

In essence, Genie 2 converts an image into a playable 3D environment. This means anyone can describe the world they want in text, pick their favorite image rendering of that idea, and then step into and interact with the newly created world (or have an AI agent trained or evaluated in it). At each step, a person or agent provides a keyboard and mouse action, and Genie 2 simulates the next observation. Genie 2 can generate consistent worlds for up to a minute, though most of the examples shown last 10 to 20 seconds.

Genie 2 responds correctly to keyboard actions by identifying the controllable character and moving it accordingly. For example, the model has to figure out that the arrow keys should move the robot, not the trees or clouds.

Genie 2 can also remember parts of the world that are no longer in view and render them accurately when they become visible again. At the same time, it generates new, plausible content on the fly as the player explores.


Genie 2 can render different perspectives, such as first-person, isometric, and third-person driving views. It also models a range of object interactions, such as bursting balloons, opening doors, and shooting explosive barrels. The model has learned to animate various types of characters performing different activities, and it can also model non-playable characters (NPCs) and even complex interactions with them.

It can also model smoke, water, gravity, point and directional lighting, reflections, bloom, and colored lighting. Genie 2 can also be prompted with real-world images, where it can model grass blowing in the wind or water flowing in a river. These examples are only a sample of the capabilities Google has showcased.

“Genie 2 shows the potential of foundational world models for creating diverse 3D environments and accelerating agent research,” according to Google. The company notes that this research direction is still at an early stage and that it looks forward to improving Genie’s world generation capabilities in terms of generality and consistency.