Update 06/12/2024: The article has been updated with the latest announcements below.

OpenAI is set to launch a 12-day “shipmas” event starting today, December 5, unveiling new features, products, and demos. A livestream will be held on each of the 12 days, and each one will be accompanied by a new launch. Here’s everything to know about 12 Days of OpenAI.

As announced by OpenAI and its CEO, the 12 Days of OpenAI event begins December 5 at 10AM Pacific time (11:30PM IST). Among the anticipated announcements are Sora, OpenAI’s long-awaited text-to-video AI tool, and a new reasoning model, according to sources familiar with the company’s plans, as reported by The Verge.

“Each weekday, we will have a livestream with a launch or demo, some big ones and some stocking stuffers. We’ve got some great stuff to share, hope you enjoy! merry christmas!” said Sam Altman on X. OpenAI has not confirmed what the announcements will be, but the event will most likely include the debut of Sora.


Mira Murati, then OpenAI’s CTO, told The Wall Street Journal in March that Sora would debut publicly by the end of the year, and 12 Days of OpenAI seems an apt event for unveiling the AI tool. Sora was first shown in February.

Sora boasts a profound comprehension of language, enabling it to accurately interpret prompts and create compelling characters that reflect vibrant emotions. The model not only comprehends user prompts but also grasps the physical context in which they exist. Sora has been in a private testing phase throughout 2024. A few weeks ago, artists who were testing it leaked access to the tool, alleging that OpenAI was using them for “unpaid R&D and PR.”

We’ll be updating this article once the announcements begin coming in, so stay tuned.

12 Days of OpenAI: All Announcements

December 5 – Day 1

ChatGPT Pro, OpenAI o1

OpenAI has announced the launch of o1, its latest AI model, which the company describes as the “smartest model in the world.” It’s smarter, faster, and packs more features (e.g., multimodality) than o1-preview. Alongside o1, the company introduced ChatGPT Pro, a $200 (approx. Rs 16,900) monthly plan that enables scaled access to the best of OpenAI’s models and tools.

This plan includes unlimited access to OpenAI o1, as well as to o1-mini, GPT-4o, and Advanced Voice. It also includes o1 pro mode, a version of o1 that uses more compute to think harder and provide even better answers to the hardest problems. In the future, OpenAI expects to add more powerful, compute-intensive productivity features to this plan.

ChatGPT Pro provides a way for researchers, engineers, and other individuals who use research-grade intelligence daily to “accelerate their productivity and be at the cutting edge of advancements in AI.” It gives them access to a version of the company’s most intelligent model that thinks longer for the most reliable responses. OpenAI claims that in evaluations from external expert testers, o1 pro mode produces more reliably accurate and comprehensive responses, especially in areas like data science, programming, and case law analysis.

Compared to both o1 and o1-preview, o1 pro mode performs better on challenging ML benchmarks across math, science, and coding. To emphasize the primary strength of o1 pro mode, which is improved reliability, OpenAI adopts a more rigorous evaluation standard. A model is deemed to have successfully solved a question only if it answers correctly in all four attempts (“4/4 reliability”), rather than just once.
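The “4/4 reliability” standard can be illustrated with a small sketch. The function names and sample data below are hypothetical and are not taken from OpenAI’s evaluation code; the sketch only assumes that each question is attempted four times and that each attempt is marked correct or incorrect.

```python
from typing import Sequence


def strict_reliability(results: Sequence[Sequence[bool]], attempts: int = 4) -> float:
    """Share of questions answered correctly on every one of `attempts` tries."""
    if not results:
        return 0.0
    solved = 0
    for tries in results:
        if len(tries) != attempts:
            raise ValueError(f"expected {attempts} attempts, got {len(tries)}")
        # A question counts as solved only if all attempts are correct.
        if all(tries):
            solved += 1
    return solved / len(results)


def first_attempt_accuracy(results: Sequence[Sequence[bool]]) -> float:
    """Share of questions whose first attempt was correct (a looser standard)."""
    if not results:
        return 0.0
    return sum(1 for tries in results if tries[0]) / len(results)


# Hypothetical outcomes for four questions, four attempts each.
sample = [
    [True, True, True, True],
    [True, True, False, True],
    [False, False, False, False],
    [True, True, True, True],
]
print(strict_reliability(sample))
print(first_attempt_accuracy(sample))
```

In this made-up sample, the second question counts as solved under a single-attempt standard but not under the stricter 4/4 rule, which is why the strict score comes out lower.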

Pro users can access this functionality by selecting “o1 pro mode” in the model picker and asking a question directly. Since answers will take longer to generate, ChatGPT will display a progress bar and send an in-app notification if you switch away to another conversation.

The company will continue adding capabilities to Pro over time to unlock more compute-intensive tasks. It’ll also continue to bring many of these new capabilities to its other subscribers.

December 6 – Day 2

Reinforcement Fine-tuning

On the second day of its “12 Days of OpenAI” Shipmas event, OpenAI announced the expansion of its Reinforcement Fine-Tuning Research Program to enable developers and machine learning engineers to create expert models fine-tuned to excel at specific sets of complex, domain-specific tasks.

The new model customization technique, called Reinforcement Fine-Tuning, enables developers to customize OpenAI’s models using dozens to thousands of high-quality tasks and to grade the model’s responses against provided reference answers. This technique reinforces how the model reasons through similar problems and improves its accuracy on specific tasks in that domain.
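To make the idea of grading a response against a reference answer concrete, here is a minimal sketch. It is not OpenAI’s Reinforcement Fine-Tuning API or its grader; the function names are hypothetical, and exact matching after light normalization is an assumption chosen only for illustration.

```python
from typing import Sequence


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def grade(response: str, reference: str) -> float:
    """Return 1.0 if the response matches the reference answer, else 0.0."""
    return 1.0 if normalize(response) == normalize(reference) else 0.0


def mean_score(responses: Sequence[str], references: Sequence[str]) -> float:
    """Average grade across a batch of tasks (hypothetical helper)."""
    if len(responses) != len(references):
        raise ValueError("responses and references must have the same length")
    if not responses:
        return 0.0
    total = sum(grade(r, ref) for r, ref in zip(responses, references))
    return total / len(responses)
```

A score like this works best when a task has one clearly correct answer. A real grader would likely need partial credit or domain-specific comparison rules.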

OpenAI encourages research institutes, universities, and enterprises to apply, particularly those that currently execute narrow sets of complex tasks led by experts and would benefit from AI assistance. OpenAI’s Reinforcement Fine-Tuning has shown great potential in fields like law, insurance, healthcare, finance, and engineering. The technique works particularly well with tasks that have a clear “right” answer, the kind that most experts in those fields would generally agree on.

As part of the research program, you will get access to OpenAI’s Reinforcement Fine-Tuning API in alpha to test this technique on your domain-specific tasks. You will be asked to provide feedback to help the AI company improve the API ahead of a public release.

December 9 – Day 3

Sora

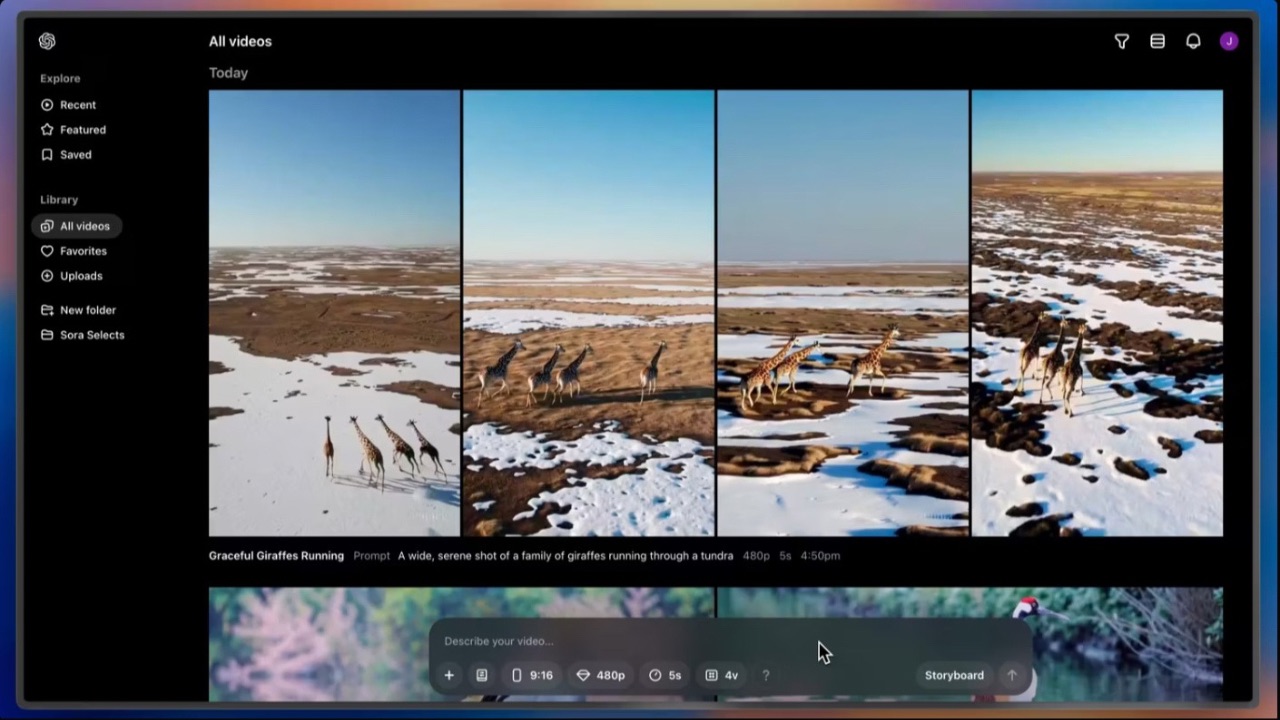

In an announcement everyone expected, OpenAI launched Sora for the general public on Day 3 of its Shipmas event. While Sora was introduced earlier this year, it was available only to a select group of testers. OpenAI has developed a new version, called Sora Turbo, that is significantly faster than the model it previewed in February. It is releasing that model today as a standalone product at Sora.com to ChatGPT Plus and Pro users.

With Sora, users can generate videos at up to 1080p resolution, up to 20 seconds long, and in widescreen, vertical, or square aspect ratios. You can bring your own assets to extend, remix, and blend, or generate entirely new content from text.

OpenAI has also developed new interfaces to make it easier to prompt Sora with text, images, and videos. There’s a storyboard tool that lets users precisely specify inputs for each frame. OpenAI will also have Featured and Recent feeds in Sora that are constantly updated with creations from the community.

Sora is included as part of the user’s Plus account at no additional cost. You can generate up to 50 videos at 480p resolution, or fewer videos at 720p, each month.

For those who want more Sora, the Pro plan includes 10x more usage, higher resolutions, and longer durations. OpenAI notes that it is working on tailored pricing for different types of users, which it plans to make available early next year.

The version of Sora OpenAI has deployed has many limitations, the AI company conveys. “It often generates unrealistic physics and struggles with complex actions over long durations. Although Sora Turbo is much faster than the February preview, we’re still working to make the technology affordable for everyone,” said OpenAI.

All Sora-generated videos come with C2PA metadata, which will identify a video as coming from Sora to provide transparency, and can be used to verify origin. OpenAI admits that while some of the measures it has taken may be imperfect, it has still added safeguards like visible watermarks by default, and built an internal search tool that uses technical attributes of generations to help verify if content came from Sora.

As of today, OpenAI is blocking particularly damaging forms of abuse, such as child sexual abuse materials and sexual deepfakes. Uploads of people will be limited at launch, but OpenAI intends to roll the feature out to more users as it refines its deepfake mitigations.

December 10 – Day 4

Canvas Availability for All

Back in October this year, OpenAI unveiled Canvas, a new interface for working with ChatGPT on writing and coding projects that go beyond simple chat. Canvas opens in a separate window, allowing the user and ChatGPT to collaborate on a project. While it was available only to the paid ChatGPT users, OpenAI has announced that it is now rolling out for all users, including free ones.

Canvas was built with GPT-4o and, while in beta, could be manually selected in the model picker. Availability for everyone means that Canvas is now out of its beta phase. With Canvas, ChatGPT can better understand the context of what you’re trying to accomplish. You can highlight specific sections to indicate exactly what you want ChatGPT to focus on. Like a copy editor or code reviewer, it can give inline feedback and suggestions with the entire project in mind.

You control the project in Canvas. You can directly edit text or code. There’s a menu of shortcuts for you to ask ChatGPT to adjust writing length, debug your code, add emojis to your content, and quickly perform other useful actions. You can also restore previous versions of your work by using the back button in Canvas.

Canvas opens automatically when ChatGPT detects a scenario in which it could be helpful. You can also include “use canvas” in your prompt to open Canvas and use it to work on an existing project.

Canvas can also help you code. Coding shortcuts include:

- Review code: ChatGPT provides inline suggestions to improve your code.

- Add logs: Inserts print statements to help you debug and understand your code.

- Add comments: Adds comments to the code to make it easier to understand.

- Fix bugs: Detects and rewrites problematic code to resolve errors.

- Port to a language: Translates your code into JavaScript, TypeScript, Python, Java, C++, or PHP.

Canvas now also supports custom GPTs, so you can add a collaborative interface to your custom AIs.

December 11 – Day 5

ChatGPT with Apple Intelligence

On Day 5 of the event, OpenAI demoed ChatGPT integrated with Apple Intelligence on Apple devices. While the integration was announced months ago by Apple and was available in beta, the official public release, in which Siri can talk to ChatGPT, came yesterday with iOS 18.2.

Siri can tap into ChatGPT’s expertise when it’s needed. Users are asked before any questions are sent to ChatGPT, along with any documents or photos, and Siri then presents the answer directly. Siri will use the latest GPT-4o model. For those who choose to access ChatGPT, Apple says their IP addresses will be hidden, and OpenAI won’t store requests.

December 12 – Day 6

ChatGPT Gets Video Input Support with Screenshare, Santa Mode also Announced

OpenAI is halfway through its “12 Days of OpenAI” Shipmas event, and on Day 6 it announced that ChatGPT’s Advanced Voice Mode now supports video input, which in other words means that ChatGPT now has vision. Using the ChatGPT app, users subscribed to ChatGPT Plus, Team, or Pro can point their phones at objects in the real world and have ChatGPT respond in real time.

This feature was delayed multiple times but has finally been released to the public, seven months after OpenAI first demoed it and said it would roll out in a “few weeks.”

Furthermore, through the Screenshare ability, the Advanced Voice Mode with vision can also understand what’s on the user’s device’s screen. It can explain various settings menus or give suggestions on a math problem.

The rollout of Advanced Voice Mode with vision began on Thursday and is expected to be completed within a week. However, not all users will gain immediate access. According to OpenAI, ChatGPT Enterprise and Edu subscribers will need to wait until January for the feature, while there is no set timeline for its availability in certain regions including the EU, Switzerland, Iceland, Norway, and Liechtenstein.

Finally, just in time for Christmas, OpenAI has also announced “Santa Mode”, where users can apply Santa’s voice as a preset voice in ChatGPT. One can find it by tapping or clicking the snowflake icon in the ChatGPT app next to the text input box.

December 13 – Day 7

ChatGPT Projects

Projects provide a new way to group files and chats for personal use, simplifying the management of work that involves multiple chats in ChatGPT. Projects keep chats, files, and custom instructions in one place.

Conversations in Projects support the following features:

- Canvas

- Advanced data analysis

- DALL-E

- Search

Currently, connectors to add files from Google Drive or Microsoft OneDrive are not supported.

You can delete your project by selecting the dots next to your Project name and selecting Delete Project. This will delete your files, conversations, and custom instructions in the Project. Once they are deleted, they cannot be recovered. You can also set Custom Instructions in your Project by selecting Add Instructions on your Project page. You can revisit this button at any time to reference or update your Instructions.

Instructions set in your Project will not interact with any conversations outside of your Project and will supersede custom instructions set in your ChatGPT account.

December 16 – Day 8

Improvements to ChatGPT Search

On Day 8, OpenAI announced improvements to ChatGPT Search that make it faster, more reliable, and better on mobile. OpenAI has also integrated Advanced Voice Mode into ChatGPT Search, so you can search using your voice. In addition, ChatGPT Search is now available to all logged-in users on all platforms in regions where ChatGPT is available.

December 17 – Day 9

OpenAI o1 and New Tools for Developers

On Day 9 of the “12 Days of OpenAI” event, the company introduced more capable models, new tools for customization, and upgrades that improve performance, flexibility, and cost-efficiency for developers building with AI. These include:

- New Go and Java SDKs available in beta.

- OpenAI o1 in the API, with support for function calling, developer messages, Structured Outputs, and vision capabilities.

- Realtime API updates, including simple WebRTC integration, a 60% price reduction for GPT-4o audio, and support for GPT-4o mini at one-tenth of previous audio rates.

- Preference Fine-Tuning, a new model customization technique that makes it easier to tailor models based on user and developer preferences.

December 18 – Day 10

1-800-ChatGPT: Talk to ChatGPT via a Phone Call or through WhatsApp

On Day 10, OpenAI announced 1-800-ChatGPT, an experimental new launch to enable wider access to ChatGPT. You can now talk to ChatGPT via phone call or message ChatGPT via WhatsApp at 1-800-ChatGPT without needing an account. You can also start a conversation on WhatsApp by following a link or scanning a QR code provided by OpenAI. One can talk to 1-800-ChatGPT for 15 minutes per month for free, with a daily limit on WhatsApp messages. OpenAI says it may adjust usage limits based on capacity if needed. It provides a notice as you approach the limit and informs you when the limit has been reached.

December 19 – Day 11

Work with Apps on macOS

On the second-to-last day of the “12 Days of OpenAI” event, OpenAI announced that users can now work with apps while using Advanced Voice Mode. It’s ideal for live debugging in terminals, thinking through documents, or getting feedback on speaker notes. You can also now search through all your previous conversations using keywords and phrases by clicking the search bar. The company has also added support for more note-taking and coding apps, including Apple Notes, Notion, Quip, and Warp.

December 20 – Day 12

Early access for safety testing

On the final day of the “12 Days of OpenAI” event, OpenAI announced that it is inviting safety researchers to apply for early access to its next frontier models. This early access program complements the company’s existing frontier model testing process, which includes rigorous internal safety testing, external red teaming such as its Red Teaming Network, and collaborations with third-party testing organizations, as well as the U.S. AI Safety Institute and the UK AI Safety Institute.

The company will begin selections as soon as possible. Applications close on January 10, 2025.

With this final announcement, OpenAI’s Shipmas event comes to an end.